Oterm

by ggozad

Interact with locally hosted Ollama models via an intuitive terminal UI with persistent chat sessions, model customization, and tool integration.

Oterm Overview

What is Oterm about?

Oterm provides a terminal-based interface to interact with Ollama models, allowing users to chat, customize system prompts, adjust parameters, and leverage MCP tools directly from the command line.

How to use Oterm?

Install quickly with uvx oterm (or via Homebrew). Run oterm in a supported terminal. Create or select a chat session, choose a model, optionally edit the system prompt and parameters, then start typing. Tools and image inputs can be invoked as needed.
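The install options above can be sketched as a small helper that picks a command based on what is already on the PATH. This is only an illustration: the function name `suggest_oterm_command` and the order of preference are assumptions, and only the commands `oterm`, `uvx oterm`, and `brew install oterm` come from this page.

```python
import shutil
from typing import Callable, Optional


def suggest_oterm_command(
    which: Callable[[str], Optional[str]] = shutil.which,
) -> Optional[list[str]]:
    """Return a command for getting oterm running, or None if no known tool is found.

    The lookup function is injectable so the logic can be exercised without
    touching the real PATH. The preference order is an assumption of this sketch.
    """
    if which("oterm"):
        # oterm is already installed, so it can be started directly.
        return ["oterm"]
    if which("uvx"):
        # Quick install path mentioned on this page.
        return ["uvx", "oterm"]
    if which("brew"):
        # Homebrew path mentioned on this page.
        return ["brew", "install", "oterm"]
    return None


if __name__ == "__main__":
    command = suggest_oterm_command()
    print(" ".join(command) if command else "Install uv or Homebrew first.")
```

The helper only suggests a command and never runs it, so it is safe to call on any machine.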

Key Features

- Simple CLI UI, no server or frontend required

- Cross‑platform support (Linux, macOS, Windows)

- Persistent chat sessions stored in SQLite

- Model selection and per‑model system prompt/parameter customization

- Integration with MCP tools, prompts, and streaming

- “Thinking” mode, multiple themes, image attachment, RAG examples

- Homebrew distribution and easy installation via uvx

Use Cases

- Quick experimentation with local LLMs

- Building RAG workflows using custom models

- Automating tasks via tool‑enabled prompts

- Developing and testing prompts in a lightweight environment

- Educational demos of LLM capabilities in the terminal

FAQ

Q: Do I need an Ollama server running? A: Yes, Ollama must be installed and models pulled locally; Oterm communicates with the local Ollama instance.
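Before starting Oterm, it can help to confirm that the local Ollama instance is reachable and has models pulled. The sketch below is not part of Oterm. It assumes Ollama is listening on its default address (`http://localhost:11434`) and queries the `/api/tags` endpoint, which lists locally available models. The function names are illustrative.

```python
import json
import urllib.error
import urllib.request
from typing import Optional

# Assumption: Ollama is listening on its default local address.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from an /api/tags style response body."""
    return [model["name"] for model in payload.get("models", []) if "name" in model]


def list_local_models(
    base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 2.0
) -> Optional[list[str]]:
    """Return the names of locally pulled models, or None if Ollama is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
            return parse_model_names(json.load(response))
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a body that is not valid JSON.
        return None


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama does not appear to be running.")
    elif not models:
        print("Ollama is running, but no models have been pulled yet.")
    else:
        print("Available models:", ", ".join(models))
```

A result of `None` means the server could not be reached, while an empty list means it is running but no models have been pulled.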

Q: Which terminals are supported? A: Any standard terminal emulator on Linux, macOS, or Windows.

Q: How are chat histories persisted? A: They are saved in a SQLite database within the user’s config directory.
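Because the history lives in an ordinary SQLite file, it can be inspected with standard tooling. The sketch below lists the tables in a database opened read-only. The exact file location and the table names Oterm uses are not stated on this page, so the path is left as a parameter and nothing about the schema is assumed.

```python
import sqlite3
from pathlib import Path


def list_tables(db_path: str) -> list[str]:
    """Return the table names in a SQLite file, opened read-only."""
    # mode=ro prevents accidental writes and fails if the file does not exist.
    uri = Path(db_path).resolve().as_uri() + "?mode=ro"
    connection = sqlite3.connect(uri, uri=True)
    try:
        rows = connection.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    finally:
        connection.close()
    return [name for (name,) in rows]
```

Call `list_tables` with the path to the database file in your own config directory. Opening the file read-only keeps the inspection from interfering with a running Oterm session's data.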

Q: Can I use custom models? A: Absolutely, any model available to Ollama can be selected and customized.

Q: How do I install on macOS? A: Via Homebrew (brew install oterm) or the generic uvx oterm command.

Oterm's README

oterm

the terminal client for Ollama.

Features

- intuitive and simple terminal UI, no need to run servers or frontends, just type oterm in your terminal.

- supports Linux, macOS, and Windows and most terminal emulators.

- multiple persistent chat sessions, stored together with system prompt & parameter customizations in sqlite.

- support for Model Context Protocol (MCP) tools & prompts integration.

- can use any of the models you have pulled in Ollama, or your own custom models.

- allows for easy customization of the model's system prompt and parameters.

- supports tools integration for providing external information to the model.

Quick install

uvx oterm

See Installation for more details.

Documentation

What's new

- Example on how to do RAG with haiku.rag.

- oterm is now part of Homebrew!

- Support for "thinking" mode for models that support it.

- Support for streaming with tools!

- Messages UI styling improvements.

- MCP Sampling is here in addition to MCP tools & prompts! Also support for Streamable HTTP & WebSocket transports for MCP servers.

Screenshots

The splash screen animation that greets users when they start oterm.

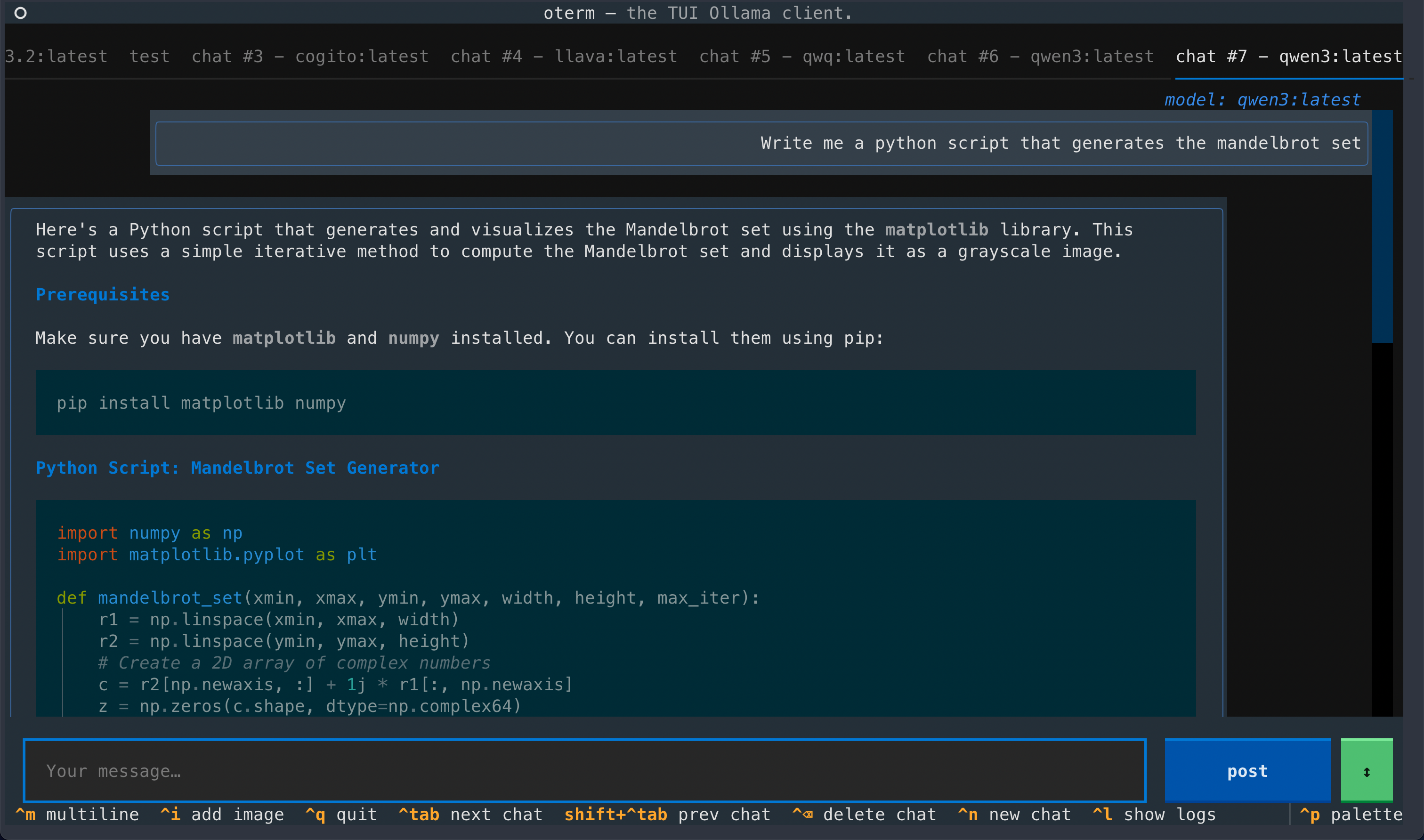

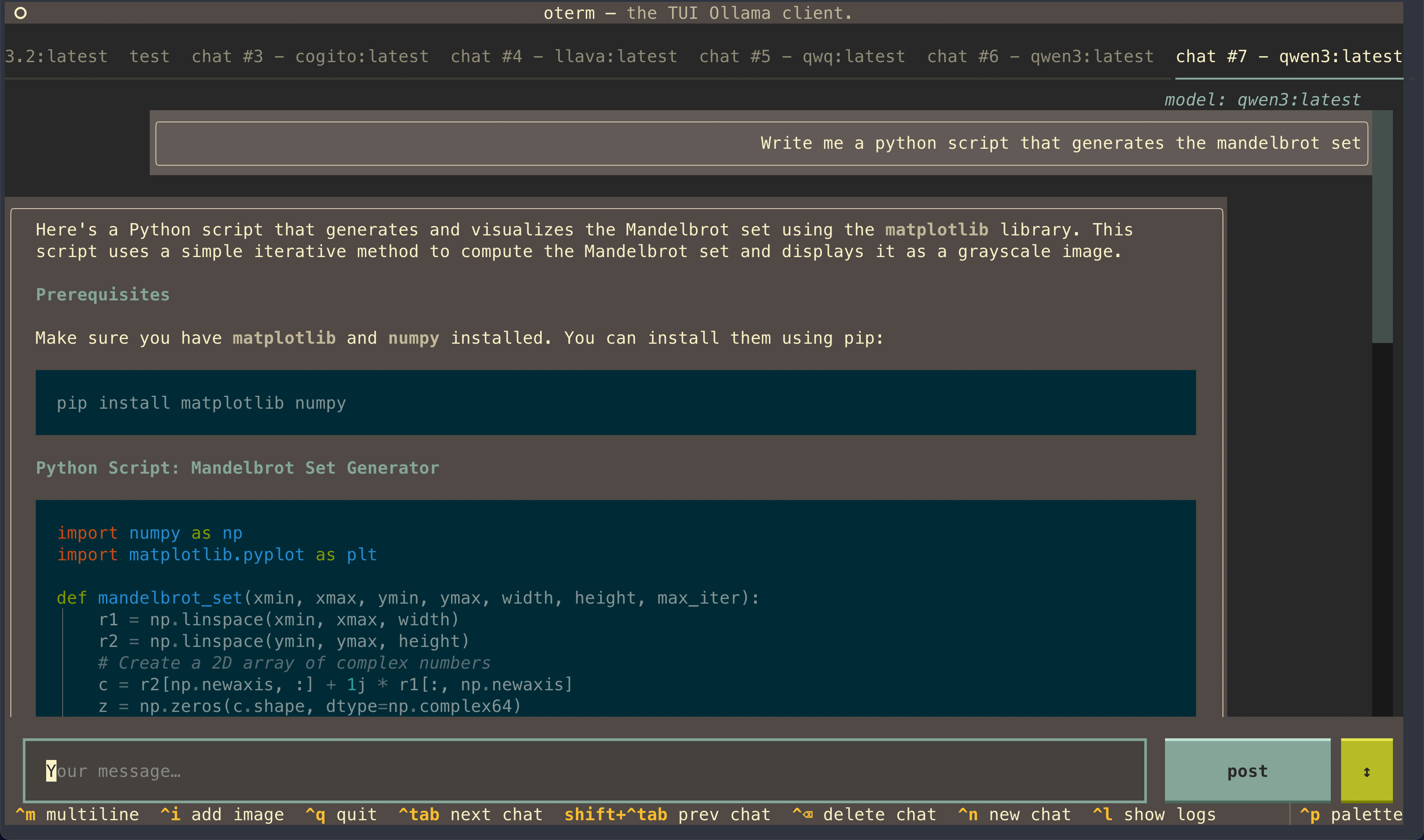

A view of the chat interface, showcasing the conversation between the user and the model.

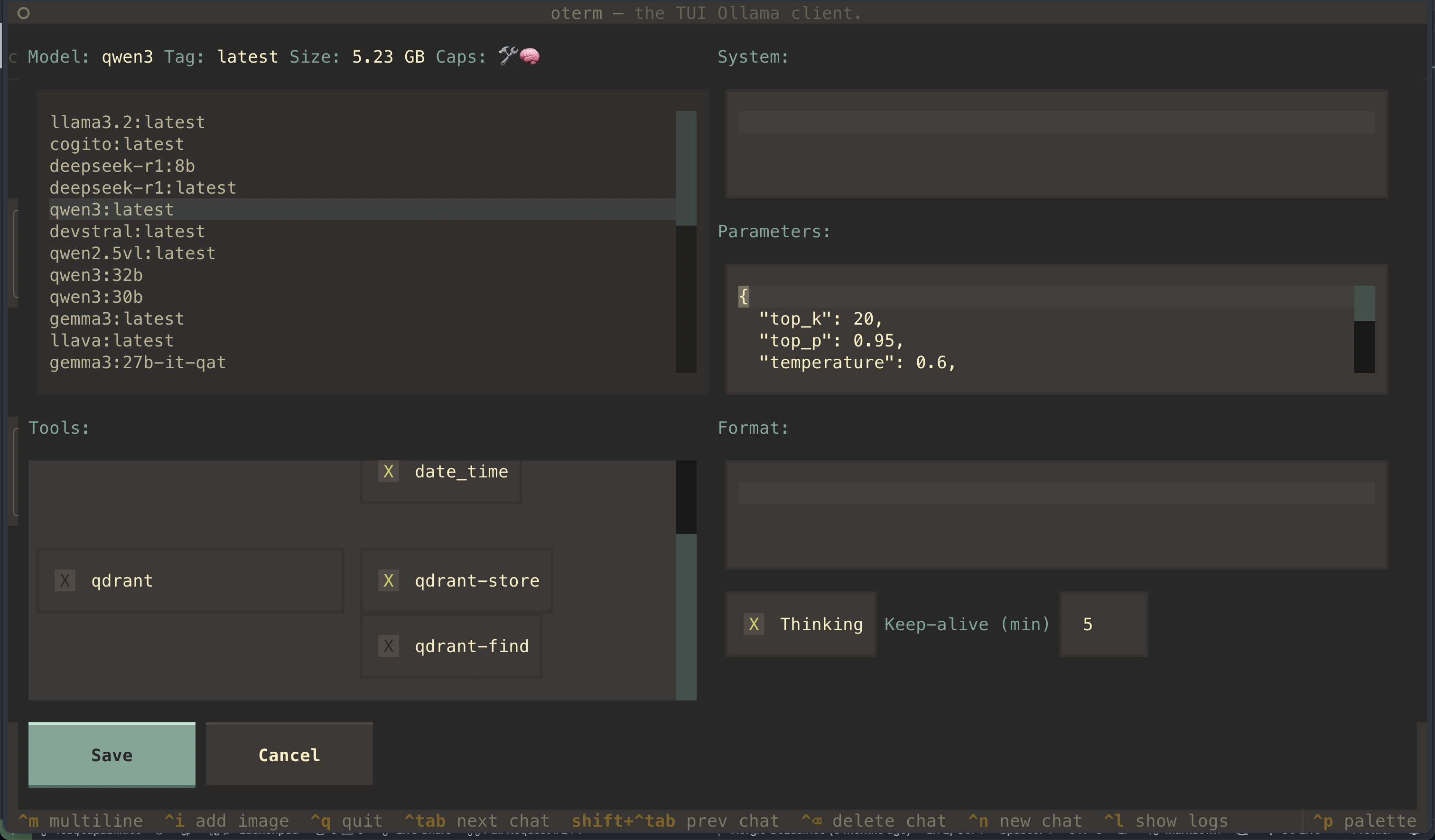

The model selection screen, allowing users to choose and customize available models.

git MCP server to access its own repo.

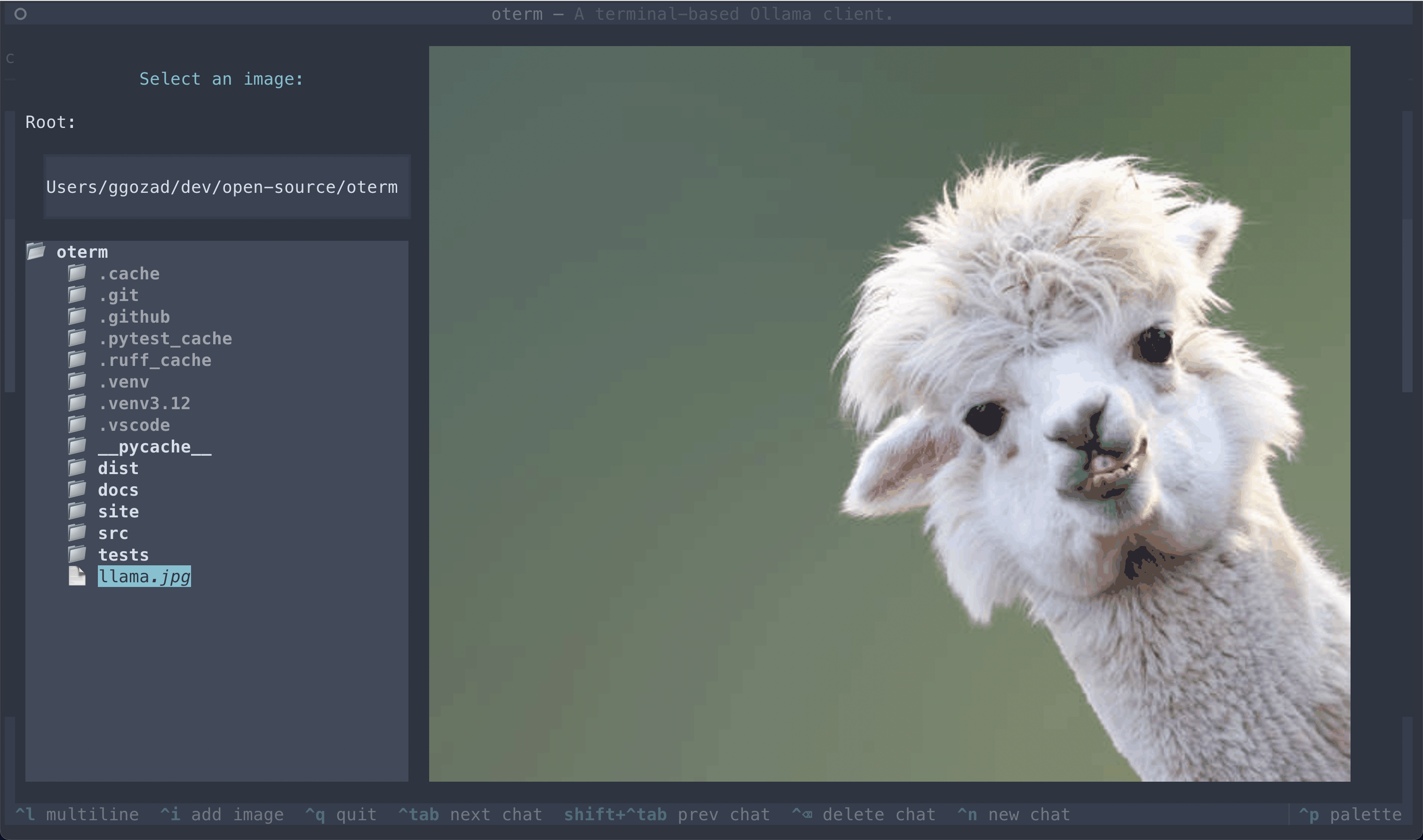

The image selection interface, demonstrating how users can include images in their conversations.

oterm supports multiple themes, allowing users to customize the appearance of the interface.

License

This project is licensed under the MIT License.

Similar MCP Servers like Oterm

Explore related MCPs that share similar capabilities and solve comparable challenges

Git

Official, by modelcontextprotocol

A Model Context Protocol server for Git repository interaction and automation.

Zed

Official, Client, by zed-industries

A high‑performance, multiplayer code editor designed for speed and collaboration.

Everything

Official, by modelcontextprotocol

Model Context Protocol Servers

Time

Official, by modelcontextprotocol

A Model Context Protocol server that provides time and timezone conversion capabilities.

Cline

Official, Client, by cline

An autonomous coding assistant that can create and edit files, execute terminal commands, and interact with a browser directly from your IDE, operating step‑by‑step with explicit user permission.

Context7 MCP

Official, by upstash

Provides up-to-date, version‑specific library documentation and code examples directly inside LLM prompts, eliminating outdated information and hallucinated APIs.

Daytona

by daytonaio

Provides a secure, elastic infrastructure that creates isolated sandboxes for running AI‑generated code with sub‑90 ms startup, unlimited persistence, and OCI/Docker compatibility.

Continue

Official, Client, by continuedev

Enables faster shipping of code by integrating continuous AI agents across IDEs, terminals, and CI pipelines, offering chat, edit, autocomplete, and customizable agent workflows.

GitHub MCP Server

by github

Connects AI tools directly to GitHub, enabling natural‑language interactions for repository browsing, issue and pull‑request management, CI/CD monitoring, code‑security analysis, and team collaboration.