OpenSumi

by opensumi

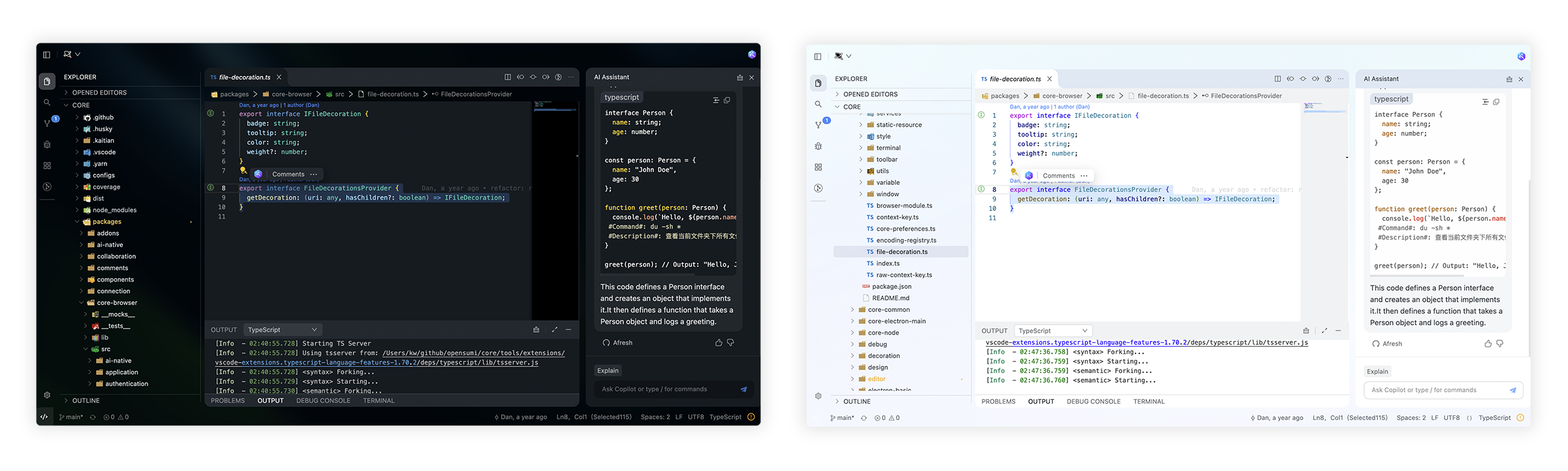

A framework for rapidly building AI‑native IDE products, offering extensible editor components, Electron desktop support, and MCP client integration.

OpenSumi Overview

What is OpenSumi about?

OpenSumi provides a modular, extensible platform to create IDE‑style applications that are AI‑first and AI‑native. It bundles core editor services, plugin architecture, and support for Model Context Protocol (MCP) tools, enabling developers to focus on product features rather than infrastructure.

How to use OpenSumi?

```shell
# Install dependencies
yarn install

# Initialize the workspace
yarn run init

# (Optional) download built‑in extensions
yarn run download-extension

# Start the development server
yarn run start
```

By default the project opens the tools/workspace folder. To launch a different workspace you can set the MY_WORKSPACE environment variable:

```shell
MY_WORKSPACE=/path/to/your/project yarn run start
```
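If you switch between projects often, you can wrap the MY_WORKSPACE variable in a small shell helper. The following is a minimal sketch under stated assumptions. resolve_workspace and start_with_workspace are hypothetical helper names that are not part of OpenSumi. The path is converted to an absolute one because this page does not say how the start script resolves relative paths, so an absolute path is the safer choice. The sketch also assumes you run it from the repository root after yarn install and yarn run init have completed.

```shell
# Hypothetical helper (not part of OpenSumi): print the absolute path of an
# existing directory, or report an error and return non-zero otherwise.
resolve_workspace() {
  local dir="${1-}"
  if [ -z "$dir" ] || [ ! -d "$dir" ]; then
    echo "workspace not found: ${dir:-<none>}" >&2
    return 1
  fi
  # Run in a subshell so the caller's working directory is unchanged;
  # CDPATH is cleared so cd never echoes a path to stdout.
  (CDPATH='' cd -- "$dir" && pwd)
}

# Hypothetical helper (not part of OpenSumi): start the dev server with
# MY_WORKSPACE set to the resolved directory. Assumes the current directory
# is the OpenSumi repository root and that `yarn run init` has already run.
start_with_workspace() {
  local ws
  ws="$(resolve_workspace "${1-}")" || return 1
  MY_WORKSPACE="$ws" yarn run start
}
```

After sourcing these helpers or pasting them into a Bash shell, run `start_with_workspace ../my-project` from the repository root. The helper checks for a missing directory before invoking yarn, so it catches a mistyped path before the dev server starts.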

Refer to CONTRIBUTING.md for details on setting up the development environment.

Key features of OpenSumi

- MCP client: native integration with Model Context Protocol tools.

- AI‑native architecture: designed for AI‑driven extensions and workflows.

- Cross‑platform: runs as a web IDE or an Electron‑based desktop app.

- Plugin system: supports custom extensions and marketplace‑style plugins.

- TypeScript & modern web stack: leverages React, Monaco, and other proven libraries.

- Ready‑made templates: Cloud IDE, Desktop IDE, CodeFuse AI IDE, CodeBlitz web IDE, Lite Web IDE, mini‑app style IDE.

Use cases of OpenSumi

- Building a cloud‑based development environment for SaaS platforms.

- Creating a desktop IDE with integrated AI assistants for code completion, debugging, or refactoring.

- Developing specialized IDEs (e.g., data‑science notebooks, low‑code editors) that require AI‑enhanced features.

- Extending existing IDEs with custom AI tools via the MCP client.

FAQ about OpenSumi

Q: Do I need to run an MCP server separately?

A: The framework includes an MCP client; you can connect it to any MCP server you operate. The repository does not ship a server, so you need to provide your own or use a hosted solution.

Q: Can I use OpenSumi in a pure web browser?

A: Yes. The codeblitz and ide-startup-lite templates demonstrate web‑only deployments.

Q: How do I add my own extensions?

A: Place the extension code under the extensions directory and reference it in the workspace configuration. The yarn run download-extension command can fetch pre‑built extensions from the marketplace.

Q: Is TypeScript required for developing plugins?

A: While TypeScript is the primary language used by the core, JavaScript plugins are also supported.

Q: Where can I find documentation?

A: Full documentation is hosted at https://opensumi.com and the repo includes a docs folder with API references.

OpenSumi's README


🌟 Getting Started

Here you can find some of our example projects and templates:

- Cloud IDE

- Desktop IDE - based on Electron

- CodeFuse IDE - AI IDE based on OpenSumi

- CodeBlitz - A pure web IDE Framework

- Lite Web IDE - A pure web IDE that runs in the browser

- Mini-App-style IDE

⚡️ Development

```shell
$ yarn install
$ yarn run init
$ yarn run download-extension # Optional
$ yarn run start
```

By default, the project opens the tools/workspace folder. To open a different directory, specify it as follows:

```shell
$ MY_WORKSPACE={local_path} yarn run start
```

You may also need to install some system-level dependencies. See Development Environment Preparation for instructions on installing them.

📕 Documentation

For complete documentation: opensumi.com

📍 Release Notes & Breaking Changes

You can find all release notes and breaking changes in CHANGELOG.md.

🔥 Contributing

Read through our Contributing Guide to learn about our submission process, coding rules and more.

🙋‍♀️ Want to Help?

Want to report a bug, contribute some code, or improve documentation? Excellent! Read up on our Contributing Guidelines for contributing and then check out one of our issues labeled as help wanted or good first issue.

🧑‍💻 Need some help?

Open a topic in our issues or discussions, and we will respond as soon as we can.

✨ Contributors

Let's build a better OpenSumi together.

We warmly invite contributions from everyone. Before you get started, please take a moment to review our Contributing Guide. Feel free to share your ideas through Pull Requests or GitHub Issues.

📃 License

Copyright (c) 2019-present Alibaba Group Holding Limited, Ant Group Co. Ltd.

Licensed under the MIT license.

This project contains various third-party code under other open source licenses.

See the NOTICE.md file for more information.


Similar MCP Servers like OpenSumi

Explore related MCPs that share similar capabilities and solve comparable challenges

Git

Official · by modelcontextprotocol

A Model Context Protocol server for Git repository interaction and automation.

Zed

Official · Client · by zed-industries

A high‑performance, multiplayer code editor designed for speed and collaboration.

Everything

Official · by modelcontextprotocol

Model Context Protocol Servers

Time

Official · by modelcontextprotocol

A Model Context Protocol server that provides time and timezone conversion capabilities.

Cline

Official · Client · by cline

An autonomous coding assistant that can create and edit files, execute terminal commands, and interact with a browser directly from your IDE, operating step‑by‑step with explicit user permission.

Context7 MCP

Official · by upstash

Provides up-to-date, version‑specific library documentation and code examples directly inside LLM prompts, eliminating outdated information and hallucinated APIs.

Daytona

by daytonaio

Provides a secure, elastic infrastructure that creates isolated sandboxes for running AI‑generated code with sub‑90 ms startup, unlimited persistence, and OCI/Docker compatibility.

Continue

Official · Client · by continuedev

Enables faster shipping of code by integrating continuous AI agents across IDEs, terminals, and CI pipelines, offering chat, edit, autocomplete, and customizable agent workflows.

GitHub MCP Server

by github

Connects AI tools directly to GitHub, enabling natural‑language interactions for repository browsing, issue and pull‑request management, CI/CD monitoring, code‑security analysis, and team collaboration.