Chat Mcp

by AI-QL

Cross‑platform desktop interface for interacting with multiple LLM backends via MCP, supporting dynamic configuration, multi‑client management, and reusable UI for both desktop and web environments.

Chat Mcp Overview

What is Chat Mcp about?

Chat Mcp provides a minimalistic, Electron‑based desktop application that connects to various Large Language Model (LLM) services through the Model Context Protocol (MCP). It offers a clean codebase for learning MCP fundamentals while allowing developers to quickly test and switch between different LLM providers.

How to use Chat Mcp?

- Clone the repository and navigate to the project folder.

- Edit src/main/config.json to set the desired command and args for the MCP server (e.g., filesystem, puppeteer, or custom servers).

- Ensure Node.js (>=14) and npm are installed (node -v / npm -v).

- Install dependencies: npm install

- Start the application: npm start

- (Optional) Build a packaged desktop app for the current OS: npm run build-app

Key features of Chat Mcp

- Cross‑Platform Compatibility – Runs on Linux, macOS, and Windows via Electron.

- Apache‑2.0 License – Free to modify and redistribute.

- Dynamic LLM Configuration – Supports any OpenAI‑SDK‑compatible model; configuration files (gtp-api.json, qwen-api.json, deepinfra.json) let you switch backends instantly.

- Multi‑Client Management – Define and manage multiple MCP clients to connect to different servers simultaneously.

- UI Reusability – The same UI can be extracted for web deployments, ensuring consistent interaction logic.

- Built‑in Troubleshooting – Guides for common issues such as npm/Electron timeouts, Windows npx problems, and RPM build errors.

Use cases of Chat Mcp

- Rapid prototyping of LLM‑driven features without building a full web stack.

- Testing multiple LLM providers (OpenAI, Qwen, DeepInfra, etc.) in a single environment.

- Educational tool for developers learning MCP concepts and client‑server interactions.

- Local development sandbox for debugging function‑calling, tool visualization, and multimodal capabilities.

FAQ from the Chat Mcp community

Q: What if npm install stalls during Electron download?

A: Set the ELECTRON_MIRROR environment variable to a reachable mirror (e.g., a regional CDN) before running npm install.

Q: How can I run an MCP server on Windows when npx fails?

A: Replace the command in config.json with node and point args to the compiled server script, as shown in the README example.

Q: My build process times out with electron‑builder.

A: Clear the cache under C:\Users\<User>\AppData\Local\electron and electron-builder, then retry using the system shell instead of VSCode's terminal.

Q: Where can I find pre‑built server packages?

A: The project installs server-everything, server-filesystem, and server-puppeteer by default. Additional servers can be added via npx <package>.

Q: How do I add my own LLM endpoint?

A: Create a new JSON config (e.g., myapi.json) following the structure of the provided examples and reference it in the UI's settings.
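As a rough illustration of that answer, the sketch below generates such a file. It assumes the same field names as the sample configs in the README (chatbotStore, defaultChoiceStore). The helper name makeApiConfig, the endpoint URL, and the model names are hypothetical placeholders, not part of the project.

```javascript
// Hypothetical helper: builds a config object shaped like the bundled
// examples (gtp-api.json, qwen-api.json, deepinfra.json).
function makeApiConfig({ url, path, model, models = [model], maxTokens = "" }) {
  if (!/^https?:\/\//.test(url)) {
    throw new Error("url must start with http:// or https://");
  }
  if (!path.startsWith("/")) {
    throw new Error("path must start with '/'");
  }
  return {
    chatbotStore: {
      apiKey: "", // left empty, as in the bundled examples
      url,
      path,
      model,
      max_tokens_value: String(maxTokens),
      mcp: true,
    },
    defaultChoiceStore: { model: models },
  };
}

// Placeholder endpoint and model names, for illustration only.
const myApi = makeApiConfig({
  url: "https://llm.example.com",
  path: "/v1/chat/completions",
  model: "my-model",
  models: ["my-model", "my-model-large"],
});

// Pretty-printed JSON, ready to be saved as myapi.json.
console.log(JSON.stringify(myApi, null, 2));
```

The printed JSON can be saved as myapi.json and supplied to the UI in the same way as the provided examples.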

Chat Mcp's README

MCP Chat Desktop App

A Cross-Platform Interface for LLMs

This desktop application utilizes the MCP (Model Context Protocol) to seamlessly connect and interact with various Large Language Models (LLMs). Built on Electron, the app ensures full cross-platform compatibility, enabling smooth operation across different operating systems.

The primary objective of this project is to deliver a clean, minimalistic codebase that simplifies understanding the core principles of MCP. Additionally, it provides a quick and efficient way to test multiple servers and LLMs, making it an ideal tool for developers and researchers alike.

News

This project originated as a modified version of Chat-UI, initially adopting a minimalist code approach to implement core MCP functionality for educational purposes.

Through iterative updates to MCP, I received community feedback advocating for a completely new architecture - one that eliminates third-party CDN dependencies and establishes clearer modular structure to better support derivative development and debugging workflows.

This led to the creation of Tool Unitary User Interface (TUUI), a restructured desktop application optimized for AI-powered development. Building upon the original foundation, TUUI serves as a practical example of AI-assisted development. If you're interested, you can also use AI to develop new features for TUUI. The platform employs strict linting and formatting to ensure AI-generated code adheres to coding standards.

📢 Update: June 2025

The current project refactoring has been largely completed, and a pre-release version is now available. Please refer to the following documentation for details:

Features

- Cross-Platform Compatibility: Supports Linux, macOS, and Windows.

- Flexible Apache-2.0 License: Allows easy modification and building of your own desktop applications.

- Dynamic LLM Configuration: Compatible with all OpenAI SDK-supported LLMs, enabling quick testing of multiple backends through manual or preset configurations.

- Multi-Client Management: Configure and manage multiple clients to connect to multiple servers using MCP config.

- UI Adaptability: The UI can be directly extracted for web use, ensuring consistent ecosystem and interaction logic across web and desktop versions.

Architecture

The project adopts a straightforward architecture consistent with the MCP documentation, to facilitate a clear understanding of MCP principles.

How to use

After cloning or downloading this repository:

- Modify the config.json file located in src/main. Ensure that the command and the path specified in the args are valid.

- Ensure that Node.js is installed on your system. You can verify this by running node -v and npm -v in your terminal to check their respective versions.

- npm install

- npm start

Configuration

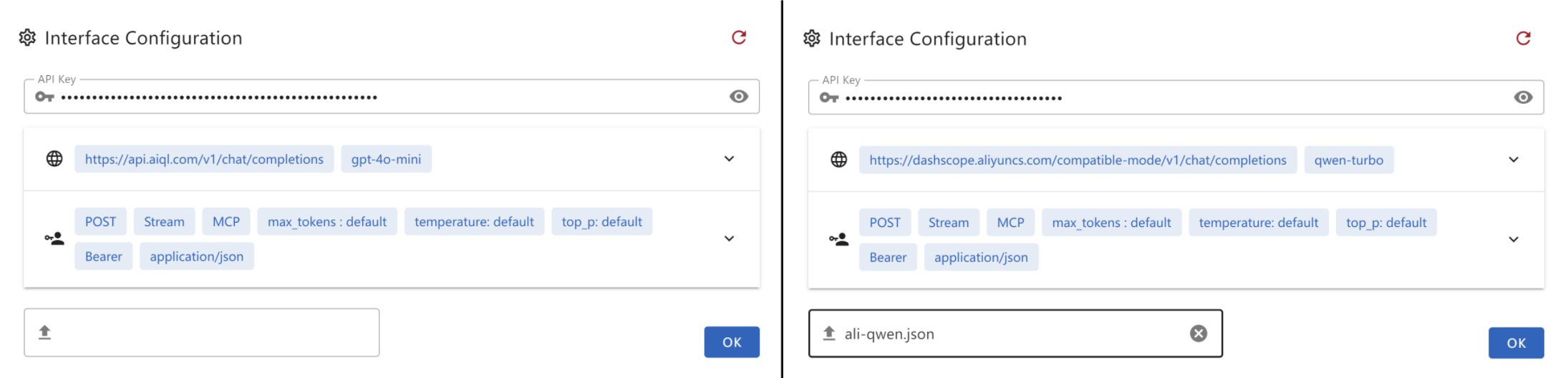

Create a .json file and paste the following content into it. This file can then be provided as the interface configuration for the Chat UI.

- gtp-api.json

      {
        "chatbotStore": {
          "apiKey": "",
          "url": "https://api.aiql.com",
          "path": "/v1/chat/completions",
          "model": "gpt-4o-mini",
          "max_tokens_value": "",
          "mcp": true
        },
        "defaultChoiceStore": {
          "model": [
            "gpt-4o-mini",
            "gpt-4o",
            "gpt-4",
            "gpt-4-turbo"
          ]
        }
      }

You can replace the 'url' if you have direct access to the OpenAI API.

Alternatively, you can also use another API endpoint that supports function calls:

- qwen-api.json

      {
        "chatbotStore": {
          "apiKey": "",
          "url": "https://dashscope.aliyuncs.com/compatible-mode",
          "path": "/v1/chat/completions",
          "model": "qwen-turbo",
          "max_tokens_value": "",
          "mcp": true
        },
        "defaultChoiceStore": {
          "model": [
            "qwen-turbo",
            "qwen-plus",
            "qwen-max"
          ]
        }
      }

- deepinfra.json

      {
        "chatbotStore": {
          "apiKey": "",
          "url": "https://api.deepinfra.com",
          "path": "/v1/openai/chat/completions",
          "model": "meta-llama/Meta-Llama-3.1-70B-Instruct",
          "max_tokens_value": "32000",
          "mcp": true
        },
        "defaultChoiceStore": {
          "model": [
            "meta-llama/Meta-Llama-3.1-70B-Instruct",
            "meta-llama/Meta-Llama-3.1-405B-Instruct",
            "meta-llama/Meta-Llama-3.1-8B-Instruct"
          ]
        }
      }
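To make the role of each field concrete, here is a hedged sketch of how a chatbotStore entry could be turned into an OpenAI-style chat completion request. It rests on several assumptions that the README does not confirm: that url and path are simply concatenated, that apiKey is sent as a Bearer token, and that an empty max_tokens_value means the limit is omitted. The function name buildChatRequest is hypothetical.

```javascript
// Sketch: turn a chatbotStore config into an OpenAI-style HTTP request.
function buildChatRequest(store, messages) {
  const body = { model: store.model, messages };
  // Assumption: an empty max_tokens_value means "use the provider default".
  if (store.max_tokens_value !== "") {
    body.max_tokens = Number(store.max_tokens_value);
  }
  return {
    // Assumption: the endpoint is url + path, minus any trailing slash on url.
    url: store.url.replace(/\/+$/, "") + store.path,
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${store.apiKey}`,
    },
    body: JSON.stringify(body),
  };
}

// Values copied from the deepinfra.json example.
const deepinfra = {
  apiKey: "",
  url: "https://api.deepinfra.com",
  path: "/v1/openai/chat/completions",
  model: "meta-llama/Meta-Llama-3.1-70B-Instruct",
  max_tokens_value: "32000",
  mcp: true,
};

const req = buildChatRequest(deepinfra, [{ role: "user", content: "Hello" }]);
console.log(req.url); // https://api.deepinfra.com/v1/openai/chat/completions
```

Splitting the endpoint into url and path is what lets the same config shape cover providers whose OpenAI-compatible route differs, such as the /v1/openai/ prefix in the DeepInfra example.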

Build Application

You can build your own desktop application by:

npm run build-app

This CLI helps you build and package your application for your current OS, with artifacts stored in the /artifacts directory.

For Debian/Ubuntu users experiencing RPM build issues, try one of the following solutions:

- Edit package.json to skip the RPM build step. Or

- Install rpm using sudo apt-get install rpm (you may need to run sudo apt update to ensure your package list is up-to-date).
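For the first option, one possible way to skip the RPM step is to remove rpm from the Linux targets. The sketch below assumes the electron-builder settings live under the build key of package.json with targets listed in build.linux.target; this project's actual package.json may be organized differently, so check it before applying anything similar. The function name dropRpmTarget is hypothetical.

```javascript
// Assumption: electron-builder settings live under "build" in package.json
// and Linux targets are listed in build.linux.target.
function dropRpmTarget(pkg) {
  const linux = pkg.build && pkg.build.linux;
  if (!linux || linux.target === undefined) return pkg; // nothing to change
  // A target may be a single entry or a list; entries are strings or objects.
  const targets = Array.isArray(linux.target) ? linux.target : [linux.target];
  const kept = targets.filter(
    (t) => (typeof t === "string" ? t : t.target) !== "rpm"
  );
  // Return a copy so the original object is left untouched.
  return { ...pkg, build: { ...pkg.build, linux: { ...linux, target: kept } } };
}

// Illustrative package.json fragment, not the project's real one.
const examplePkg = {
  name: "demo",
  build: { linux: { target: ["AppImage", "deb", "rpm"] } },
};

console.log(dropRpmTarget(examplePkg).build.linux.target); // [ 'AppImage', 'deb' ]
```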

Troubleshooting

Error: spawn npx ENOENT - ISSUE 40

Modify the config.json in src/main

On Windows, npx may not work; please refer to my workaround in ISSUE 101.

- Or you can use node in config.json:

      {
        "mcpServers": {
          "filesystem": {
            "command": "node",
            "args": [
              "node_modules/@modelcontextprotocol/server-filesystem/dist/index.js",
              "D:/Github/mcp-test"
            ]
          }
        }
      }

Please ensure that the provided path is valid, especially if you are using a relative path. It is highly recommended to provide an absolute path for better clarity and accuracy.
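The recommendation to use absolute paths can be applied mechanically. The following is a minimal sketch, assuming a server entry with command and args as in the example above. It resolves only those relative args that point at something that exists on disk and leaves flags and package names alone. The function name absolutizeArgs is hypothetical.

```javascript
const fs = require("fs");
const path = require("path");

// Resolve relative args that point at existing files or directories
// against baseDir; leave everything else (flags, package names) untouched.
function absolutizeArgs(server, baseDir) {
  const args = server.args.map((arg) => {
    if (path.isAbsolute(arg)) return arg;
    const candidate = path.resolve(baseDir, arg);
    return fs.existsSync(candidate) ? candidate : arg;
  });
  return { ...server, args };
}

// Entry copied from the example above; resolved against the current directory.
const server = {
  command: "node",
  args: [
    "node_modules/@modelcontextprotocol/server-filesystem/dist/index.js",
    "D:/Github/mcp-test",
  ],
};

console.log(absolutizeArgs(server, process.cwd()));
```

Run from the project root after npm install, the first argument would be expected to resolve to a full path, while an argument that does not exist on the current machine is passed through unchanged.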

By default, server-everything, server-filesystem, and server-puppeteer are installed for testing purposes. You can install additional server libraries, or use npx to run other server libraries as needed.

Installation timeout

After running npm install for the entire project, the node_modules directory typically exceeds 500MB.

If the installation process stalls at less than 300MB and the progress bar remains static, it is likely due to a timeout during the installation of the latter part, specifically Electron.

This issue often arises because downloads from Electron's default server are very slow or even blocked in certain regions. To resolve it, set the ELECTRON_MIRROR environment variable (for the current session or globally) to an Electron mirror site that is accessible from your location.
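One way to apply ELECTRON_MIRROR without changing global settings is to set it only for the install process. This is a sketch under stated assumptions: the mirror URL below is merely an example of a public mirror and should be replaced with one reachable from your network, and the helper names withElectronMirror and installWithMirror are hypothetical.

```javascript
const { spawnSync } = require("child_process");

// Example mirror only; substitute one that is reachable from your network.
const EXAMPLE_MIRROR = "https://npmmirror.com/mirrors/electron/";

// Returns a copy of the environment with ELECTRON_MIRROR set.
function withElectronMirror(env, mirror = EXAMPLE_MIRROR) {
  return { ...env, ELECTRON_MIRROR: mirror };
}

// Runs `npm install` with the mirror applied to this one process only.
// shell: true lets the same command string work on Windows and Unix.
function installWithMirror(mirror) {
  return spawnSync("npm install", {
    shell: true,
    stdio: "inherit",
    env: withElectronMirror(process.env, mirror),
  });
}

// Uncomment to run the install from the project root:
// installWithMirror();
```

Setting the variable in your shell before running npm install has the same effect; the script form is only convenient when the install is already driven from Node.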

Electron builder timeout

When using electron-builder to package files, it automatically downloads several large release packages from GitHub. If the network connection is unstable, this process may be interrupted or time out.

On Windows, you may need to clear the cache located under the electron and electron-builder directories within C:\Users\YOURUSERNAME\AppData\Local before attempting to retry.
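The cleanup can also be scripted. The sketch below assumes that "clearing the cache" can be done by deleting the electron and electron-builder folders under the local application data directory in their entirety; if you prefer to remove only their contents, adjust accordingly. The function names and the --clear flag are hypothetical.

```javascript
const fs = require("fs");
const path = require("path");

// The two cache folders named above, relative to the Local app data folder.
function cacheDirs(localAppData) {
  return ["electron", "electron-builder"].map((name) =>
    path.join(localAppData, name)
  );
}

// Deletes each cache folder if present; returns the folders it removed.
function clearCaches(localAppData) {
  const removed = [];
  for (const dir of cacheDirs(localAppData)) {
    if (fs.existsSync(dir)) {
      fs.rmSync(dir, { recursive: true, force: true });
      removed.push(dir);
    }
  }
  return removed;
}

// Only acts when asked explicitly, and only where LOCALAPPDATA is defined.
// Usage (Windows): node clear-cache.js --clear
if (process.argv.includes("--clear") && process.env.LOCALAPPDATA) {
  console.log("Removed:", clearCaches(process.env.LOCALAPPDATA));
}
```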

Due to potential terminal permission issues, it is recommended to use the default shell terminal instead of VSCode's built-in terminal.

Demo

Multimodal Support

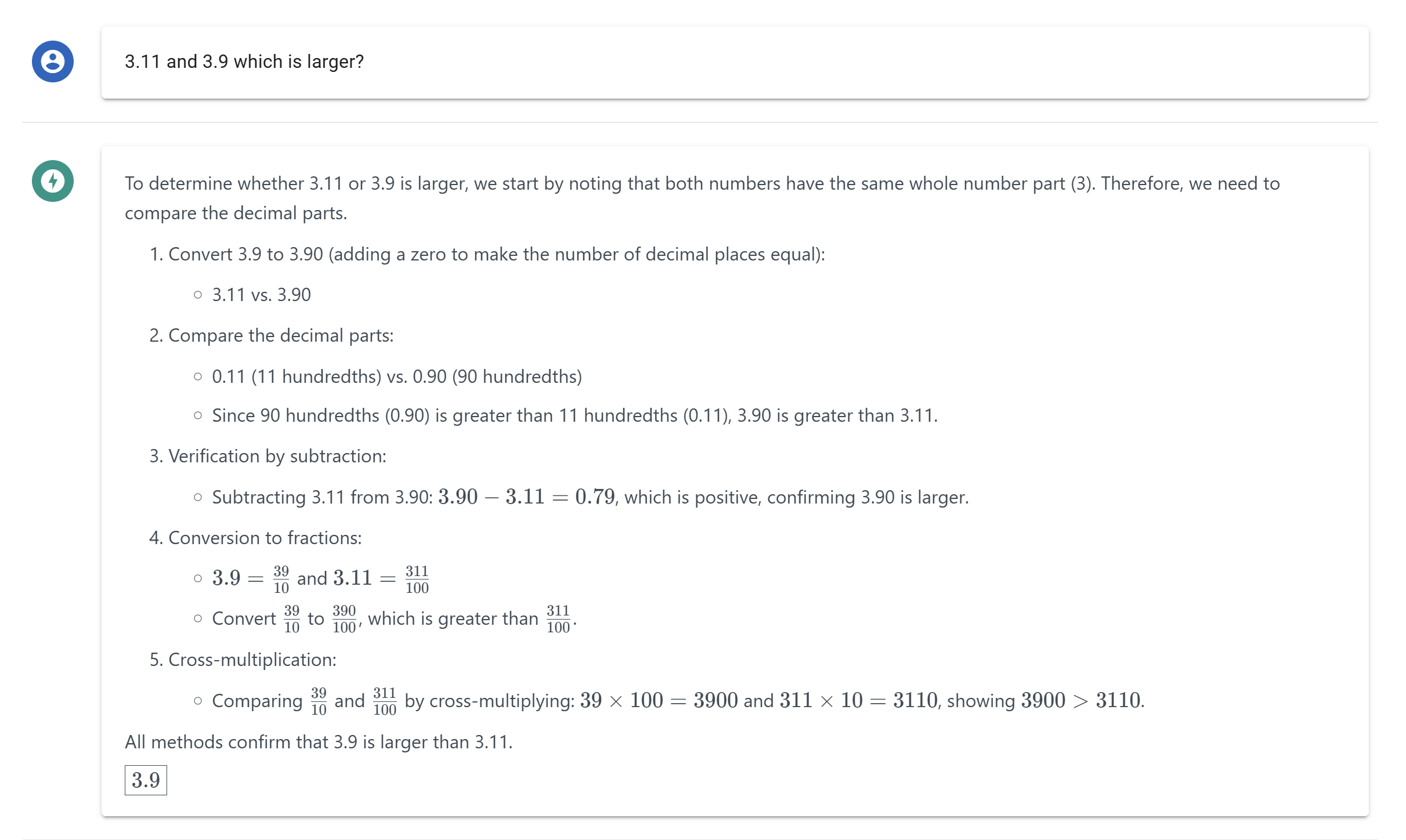

Reasoning and Latex Support

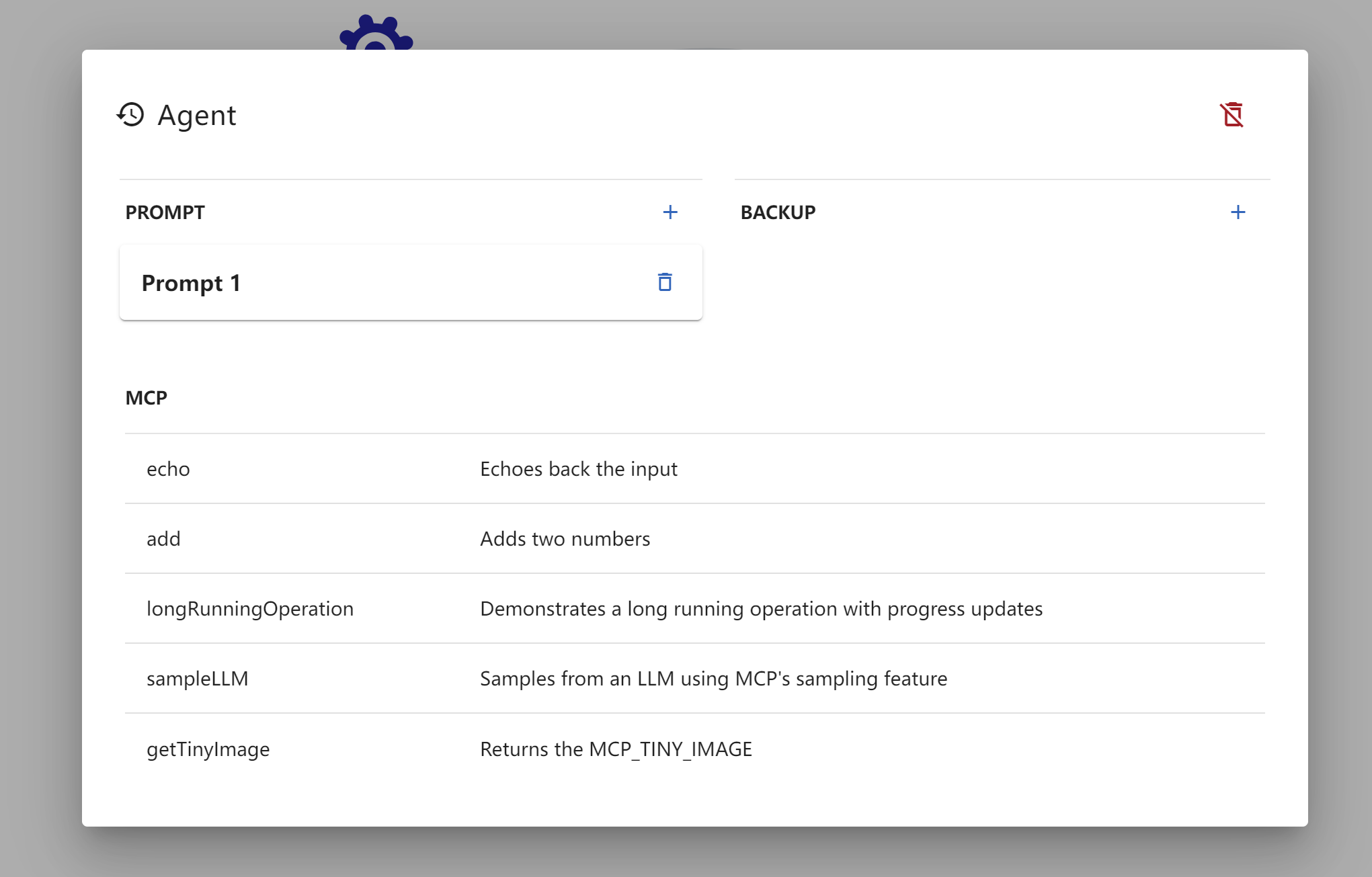

MCP Tools Visualization

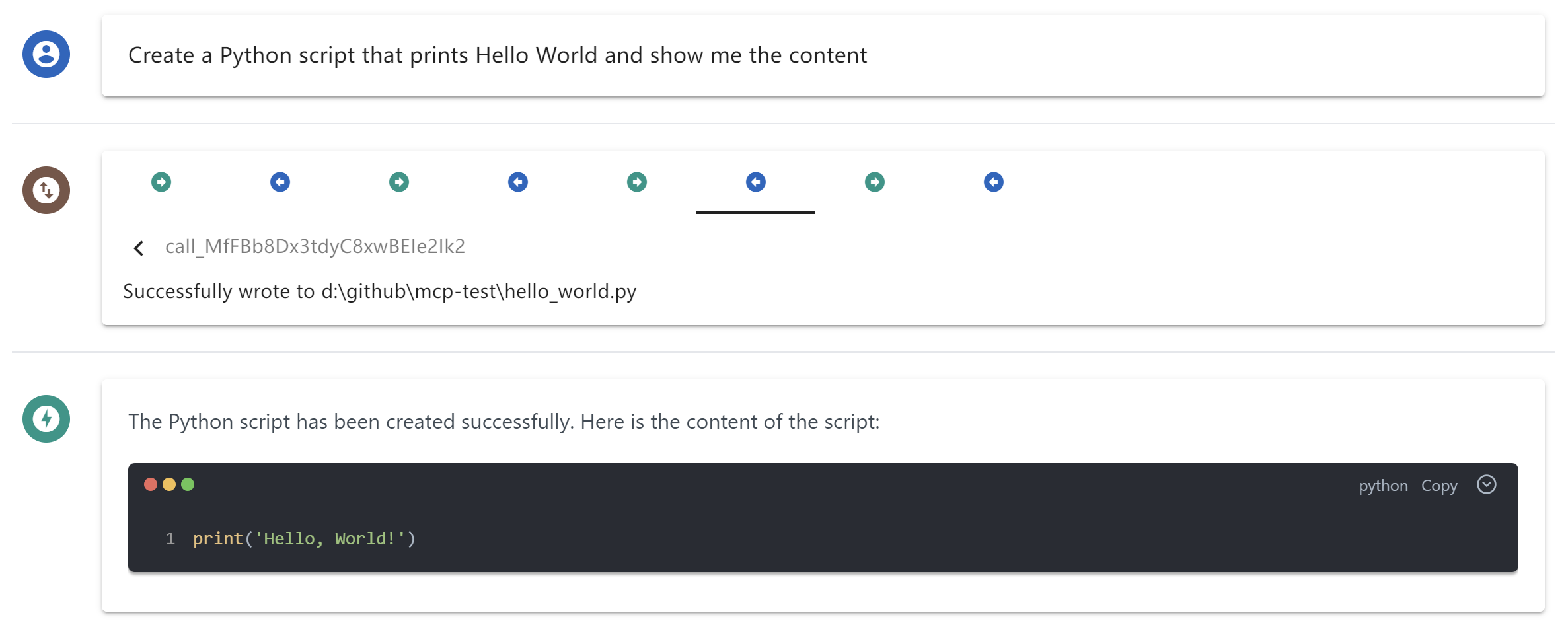

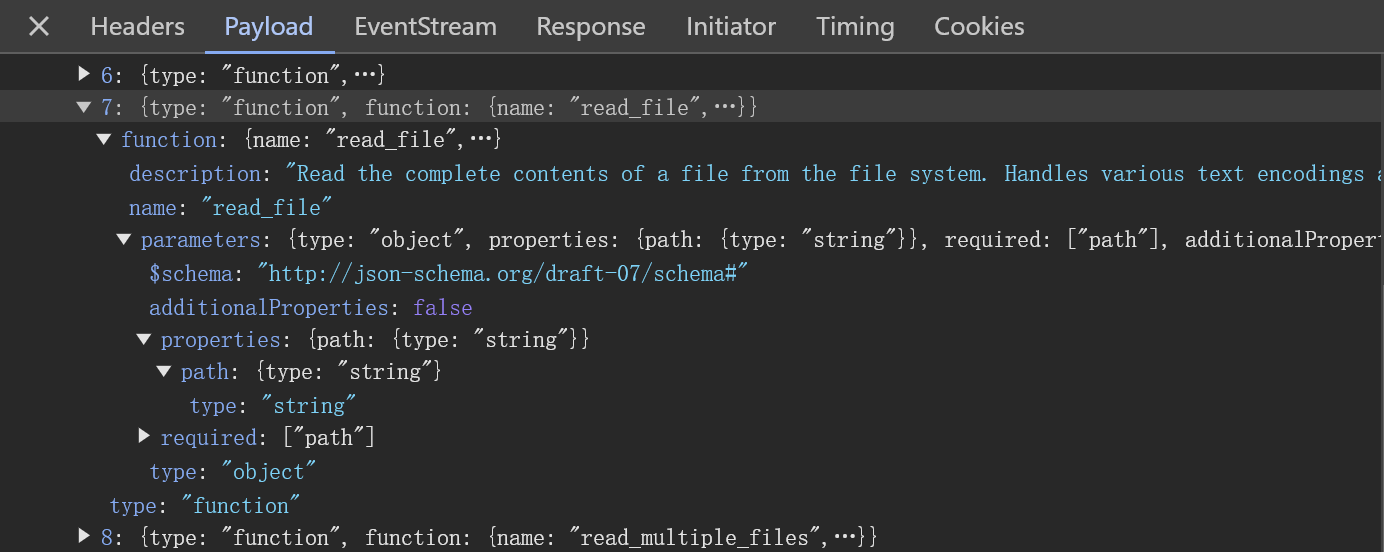

MCP Toolcall Process Overview

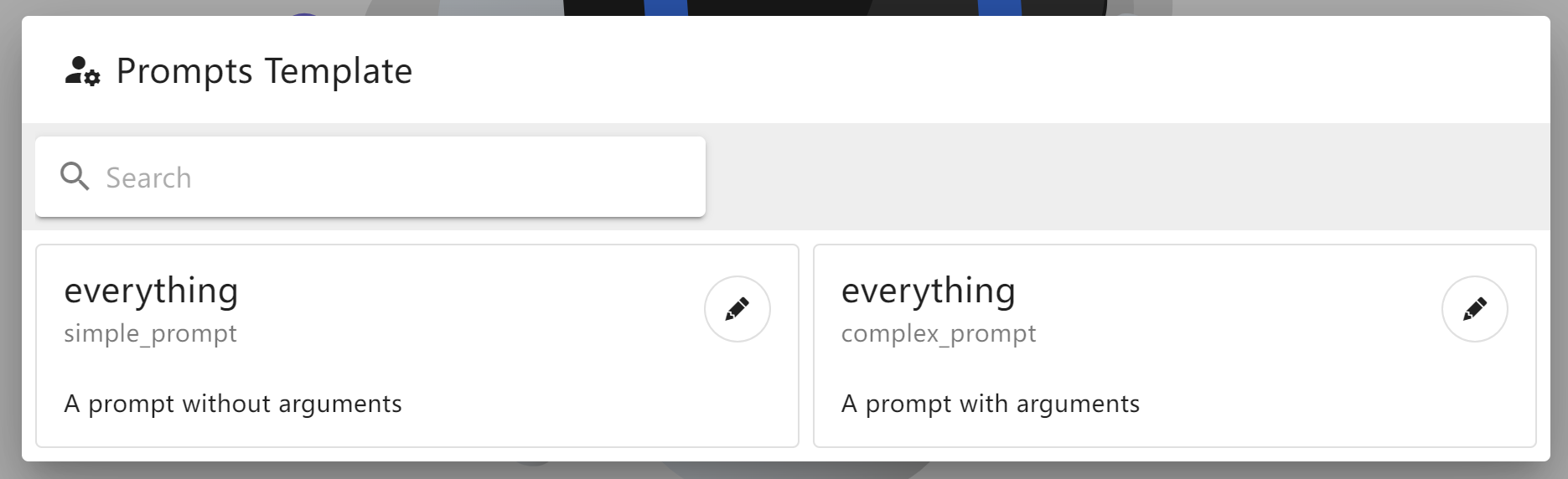

MCP Prompts Template

Dynamic LLM Config

DevTool Troubleshooting

